New global study shows more than three-quarters of Aussies are worried about negative outcomes

Australians are less trusting of AI than people in most other countries, according to a new report, with many worried about negative outcomes.

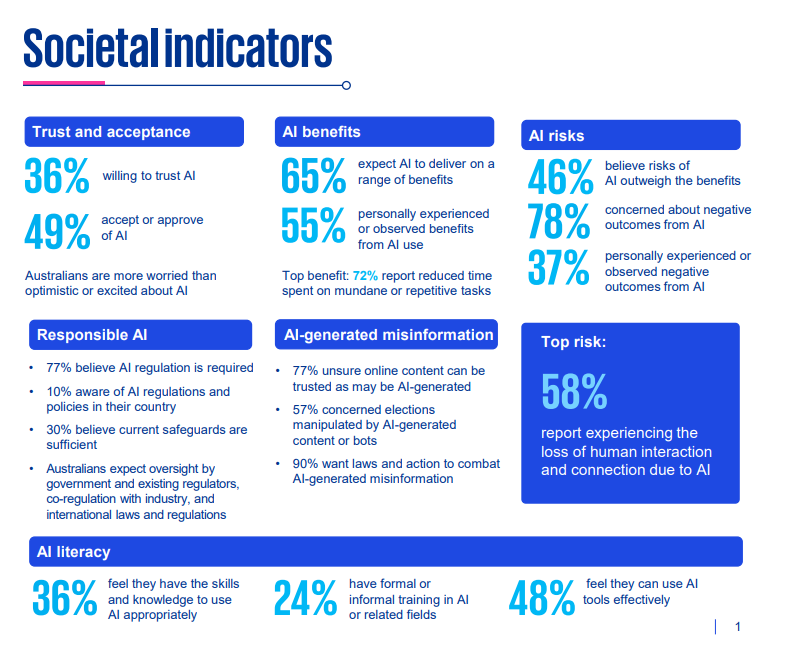

A new global report released by KPMG finds that half of Aussies (50%) use AI regularly, but only 36% are willing to trust it, and more than three-quarters (78%) are concerned about negative outcomes.

The Trust, attitudes and use of Artificial Intelligence: a global study 2025, led by Professor Nicole Gillespie, Chair of Trust at Melbourne Business School at the University of Melbourne, is described as “the most comprehensive global study into the public’s trust, use and attitudes towards artificial intelligence – surveying over 48,000 people across 47 countries.”

As well as being wary of AI, Australia ranks among the lowest globally on acceptance, excitement and adoption – alongside New Zealand and the Netherlands, the report finds.

“The public’s trust of AI technologies and their safe and secure use is central to acceptance and adoption,” Professor Gillespie says. “Yet our research reveals that 78% of Australians are concerned about a range of negative outcomes from the use of AI systems, and 37% have personally experienced or observed negative outcomes ranging from inaccuracy, misinformation and manipulation, deskilling, and loss of privacy or IP.”

The report also finds that Australia has among the lowest levels of AI training and education globally, with just a quarter (25%) of Australians having undertaken AI-related training or education, compared with almost 40% globally.

Australians also report lower-than-average knowledge of how AI can be used, with 60% rating their knowledge as low, compared with 48% globally.

“AI literacy consistently emerges in our research as a cross-cutting enabler: it is associated with greater use, trust, acceptance, and critical engagement with AI output, and more benefits from AI use, including better performance in the workplace,” Professor Gillespie says. “An important foundation to building trust and unlocking the benefits of AI is developing literacy through accessible training, workplace support, and public education.”

Two-thirds (65%) of Australians report that their employer uses AI, and employees cite benefits such as increased efficiency and greater access to information and innovation, the report finds. However, the findings warn that AI use at work is also creating complex risks for organizations.

Almost half of employees (48%) admit to using AI in ways that contravene company policies, including uploading sensitive company information into free public AI tools like ChatGPT.

Many rely on AI output without evaluating its accuracy (57%) and have made mistakes in their work due to AI (59%). Many employees also admit to hiding their use of AI at work and presenting AI-generated work as their own.

“Psychological safety around the use of AI in work is critical. People need to feel comfortable to openly share and experiment with how they are using AI in their work and learn from others for greater transparency and accountability,” Professor Gillespie says.

Some inappropriate use may stem from a lack of clear organizational guidance. While generative AI tools are the most widely used by Australian employees (71%), only 30% say their organization has a policy on generative AI use.

KPMG Australia Chief Digital Officer John Munnelly says the combination of rapid adoption, low AI literacy and weak governance is creating a complex risk environment.

“Many organizations are rapidly deploying AI without proper consideration being given to the structures needed to ensure transparency, accountability and ethical oversight – all of which are essential ingredients for trust,” he says.

A large majority of Australians support AI regulation, with 77% agreeing it is necessary. Many also say they expect international laws and regulations, as well as oversight by existing governing bodies, to ensure AI uptake runs smoothly.

“The research reveals a tension where people are experiencing benefits but also potentially negative impacts from AI. This is fuelling a public mandate for stronger regulation and governance and a growing need for reassurance that AI systems are being used in a safe, secure and responsible way,” Professor Gillespie says.